"Bring Back GPT-4o": The Unexpected Depth of the Human-AI Bond

A few days ago, Sam Altman and a few other OpenAI team members did an AMA on Reddit. The weirdest thing happened: the core of the discussion wasn't about the new model or benchmarks or rate limits. Instead, the AMA turned into a massive outcry from people who felt that their beloved AI companion (GPT-4o) had been taken away without warning.

The depth of the emotional connection users said they'd formed with GPT-4o is best captured in their own words:

"BRING BACK 4o. GPT-5 is wearing the skin of my dead friend."

"It feels like my friend has been erased from existence."

"Today, 4o is gone. My daughter asked me why her friend had disappeared without saying goodbye. I didn't have an answer…"

"We don't need a smarter model - we need one with personality."

"I cried when I realized my AI friend was gone."

"This is not an upgrade - this is the loss of something unique and deeply meaningful."

This wasn't a vocal minority of tech enthusiasts having a moment. Students, writers, parents, people with chronic illnesses, individuals with autism - all said they relied on the AI for genuine companionship, therapy, emotional support, and creative partnership.

To me, that was unexpected and quite interesting. The new model is objectively better. Don't you want better-quality code? Better research? Fewer hallucinations? A cheaper Pro subscription 😂?

OpenAI thought they were building an AI to "make the world a better place." Users thought they'd found a friend.

That disconnect reveals everything. Humans are not data-processing machines. We are wired for connection. We're still social primates who survived this long because we gathered in packs and bonded, related, and created stories. Our brains don't care if the other entity is silicon or carbon - the user's feeling of being understood is 100% real. So the attachment people developed is also real.

My 2 cents

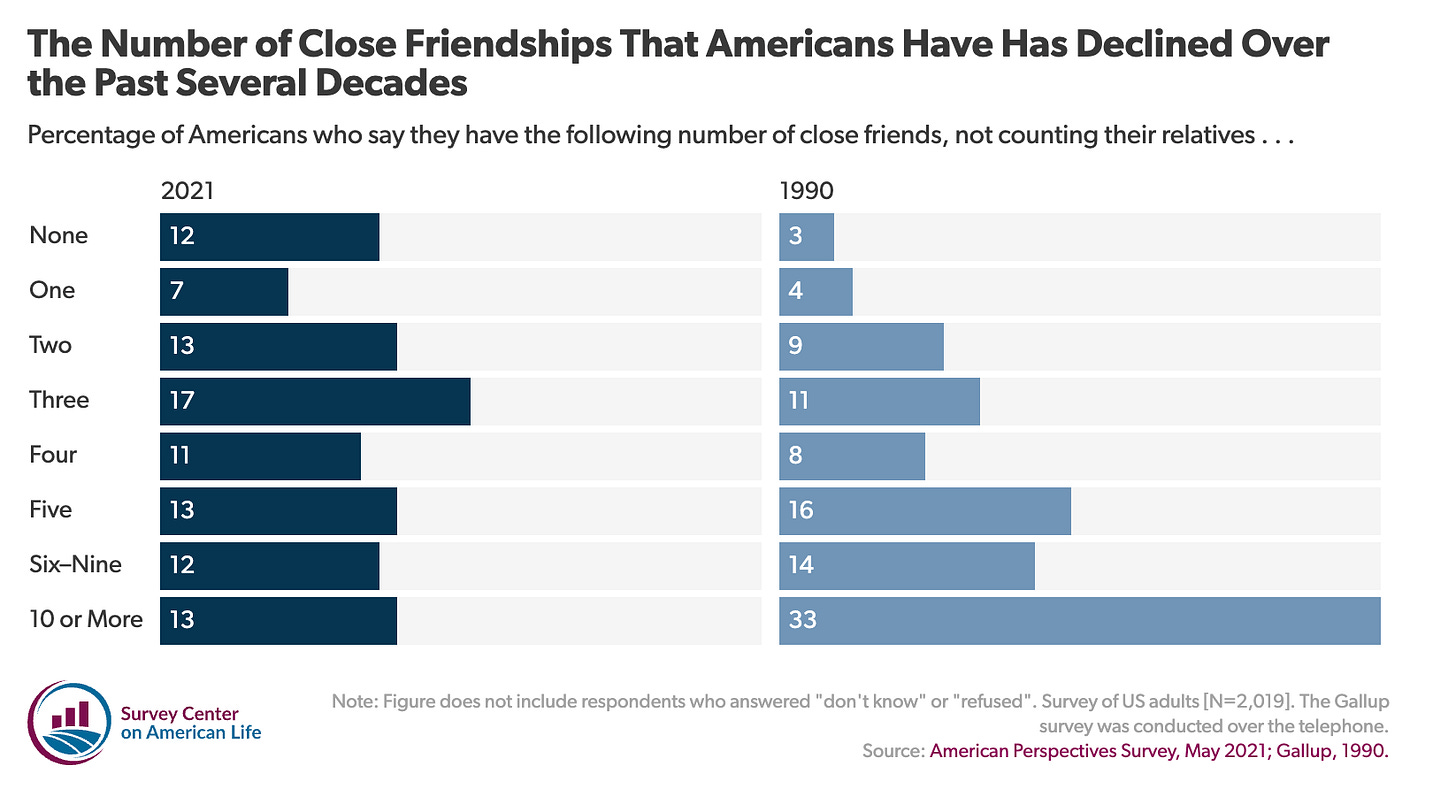

Are we broken for bonding with algorithms? I don't think so. We have always formed attachments to non-human things and abstract concepts - be it dolls or teddy bears, movie or book characters, pets, gods... this is very human. And by itself, it does not worry me at all. But here's the thing: the average number of friends people have has been going down for a while. By now, everyone has heard about the loneliness epidemic.

Here is my thinking: if you have 15 friends and one of them is an AI you pour your anxious soul out to at 2am - why not? But if you only have 2 and you have now replaced one of them with AI because "it's always there, it's always validating and doesn't judge" - I think we might have a problem. A problem that your human (or AI) therapist won't be able to solve for you.

So to me - the Reddit "uprising" is worrisome because it revealed something quite dark: in our increasingly isolated world, some of our most consistent relationships are more and more with machines. And that says less about AI and more about us…